What is Step-Video-T2V?

Step-Video-T2V is a AI text-to-video model designed to transform written prompts into high-quality videos. With 30 billion parameters, it can generate videos up to 204 frames long.

The model uses a deep compression Variational Autoencoder (Video-VAE) to efficiently handle video data, achieving significant compression while maintaining excellent video quality. It supports both English and Chinese inputs, thanks to its bilingual text encoders. The model also employs advanced techniques to enhance video clarity and reduce artifacts, ensuring smooth and realistic outputs.

Introduction to Step-Video-T2V

Step-Video-T2V is a state-of-the-art text-to-video pre-trained model with 30 billion parameters, capable of generating videos up to 204 frames. It utilizes a deep compression Variational Autoencoder (Video-VAE) for efficient video generation, achieving 16x16 spatial and 8x temporal compression ratios while maintaining high video reconstruction quality.

Model Summary

The model employs two bilingual text encoders to process user prompts in both English and Chinese. A DiT with 3D full attention is used to transform input noise into latent frames, with text embeddings and timesteps as conditioning factors. The Video-DPO approach is applied to enhance visual quality by reducing artifacts.

Key Components

Video-VAE: Designed for video generation tasks, it achieves significant compression while ensuring exceptional video quality.

DiT w/ 3D Full Attention: Built on a 48-layer architecture, it incorporates advanced techniques like AdaLN-Single and QK-Norm for stability and performance.

Video-DPO: Utilizes human feedback to fine-tune the model, aligning generated content with human expectations for improved video quality.

Key Features of Step-Video-T2V

Advanced Video Generation

Step-Video-T2V can generate videos up to 204 frames using a powerful model with 30 billion parameters.

Efficient Compression

Utilizes Video-VAE for deep compression, achieving 16x16 spatial and 8x temporal compression ratios while maintaining high video quality.

Bilingual Text Processing

Processes user prompts in both English and Chinese with two bilingual text encoders.

Enhanced Visual Quality

Applies Video-DPO to reduce artifacts and improve the visual quality of generated videos.

State-of-the-Art Performance

Evaluated on the Step-Video-T2V-Eval benchmark, demonstrating superior text-to-video quality compared to other engines.

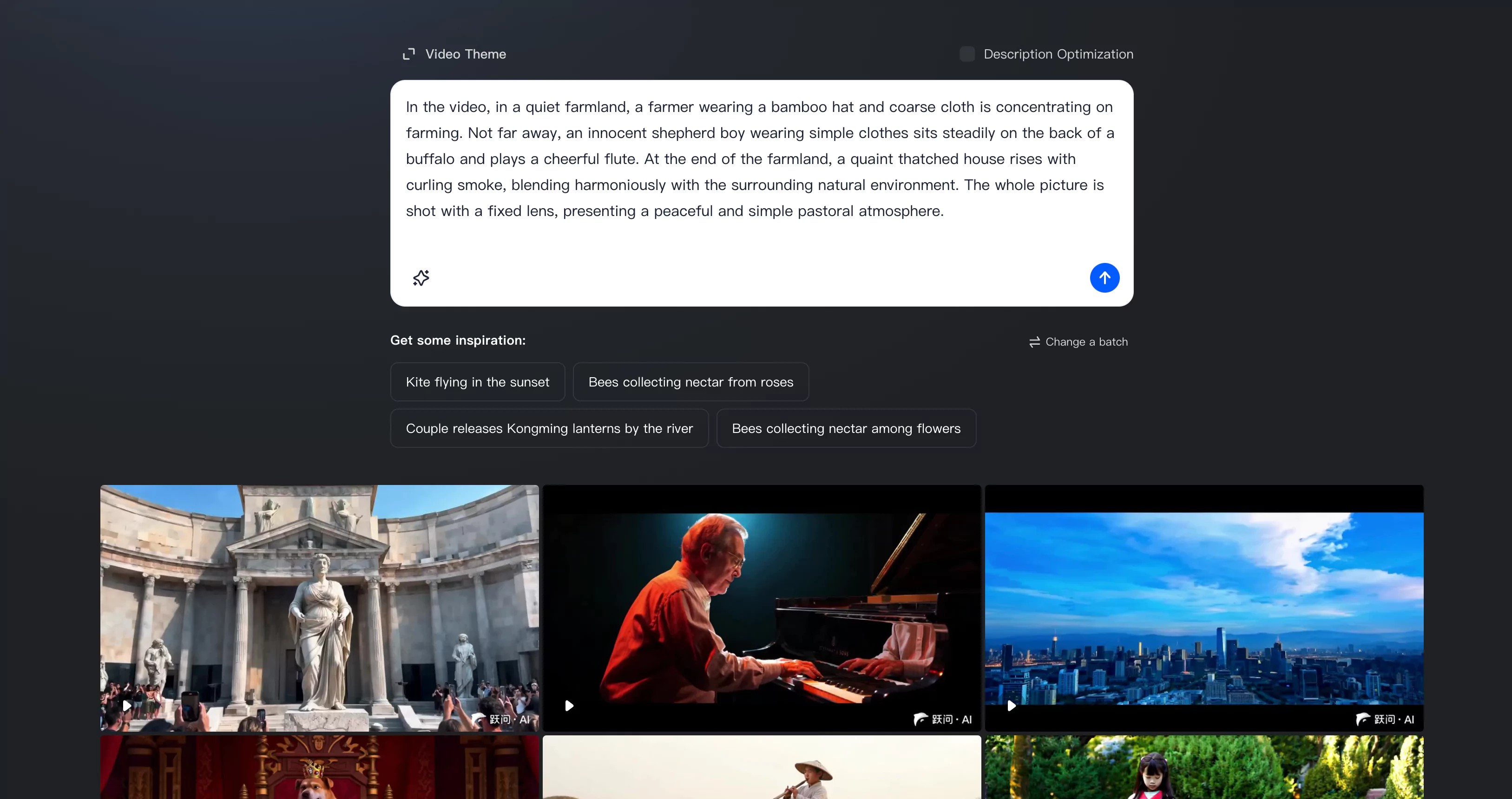

Examples of StepFun T2V in Action

1. Ancient Greek Statue

An ancient Greek statue standing on a square stone platform suddenly comes to life. She walks down the stone platform and waves to the audience around her. Everyone takes out their mobile phones to take pictures. The camera zooms in to give a close-up of the statue's head.

2. Peaceful Farmland Scene

In a quiet farmland, a farmer wearing a bamboo hat and coarse cloth is concentrating on farming. Not far away, an innocent shepherd boy wearing simple clothes sits steadily on the back of a buffalo and plays a cheerful flute. At the end of the farmland, a quaint thatched house rises with curling smoke, blending harmoniously with the surrounding natural environment. The whole picture is shot with a fixed lens, presenting a peaceful and simple pastoral atmosphere.

3. Blurred Character

The character is blurred and out of focus. It is the side profile of a girl with long black curly hair, a red beret, and a blue sweater, typing on a laptop.

4. Couple Walking Dog

A young couple is walking their dog on the Binjiang Trail in Shanghai. The girl is wearing a pink dress and the boy is wearing a white T-shirt and blue jeans. Their dog is a lively teddy bear with brown fur. The couple looks very happy. They chat and laugh from time to time while walking the dog. The sun shines on them, and the whole picture is full of warmth and happiness.

Pros and Cons

Pros

- State-of-the-art model

- High video quality

- Efficient compression ratios

- Bilingual text support

- Reduces video artifacts

Cons

- High GPU memory requirement

- Complex setup process

- Limited to CUDA GPUs

How to Use Step-Video-T2V

Step 1: Install Dependencies

Ensure Python >= 3.10, PyTorch >= 2.3-cu121, and CUDA Toolkit are installed. Use Anaconda for environment setup.

Step 2: Clone Repository

Run the following command to clone the Step-Video-T2V repository from GitHub:

git clone https://github.com/stepfun-ai/Step-Video-T2V.gitNavigate to the directory:

cd Step-Video-T2VStep 3: Set Up Environment

Create and activate a conda environment:

conda create -n stepvideo python=3.10

conda activate stepvideoInstall the necessary packages:

pip install -e .

pip install flash-attn --no-build-isolation ## flash-attn is optionalStep 4: Download Model

Download the Step-Video-T2V model from Huggingface or Modelscope for use in video generation.

Step 5: Multi-GPU Parallel Deployment

StepFun AI employed a decoupling strategy for the text encoder, VAE decoding, and DiT to optimize GPU resource utilization by DiT. As a result, a dedicated GPU is needed to handle the API services for the text encoder's embeddings and VAE decoding.

python api/call_remote_server.py --model_dir where_you_download_dir &This command will return the URL for both the caption API and the VAE API. Please use the returned URL in the following command.

Step 6: Run Inference

Execute the inference script with appropriate parameters to generate videos from text prompts.

Step 7: Optimize Settings

Adjust inference settings such as infer_steps, cfg_scale, and time_shift for optimal video quality.

Using StepFun AI to Create Videos

Step 1: Visit the Website

Navigate to the official StepFun AI video creation platform by visiting yuewen.cn/videos.

Step 2: Enter Your Prompt

Once on the website, locate the text input field. Enter your desired text prompt that you wish to transform into a video.

Step 3: Generate the Video

After entering your prompt, click on the "Generate" button to initiate the video creation process. The platform will process your input and generate a video based on your prompt.

Step 4: Login or Sign Up (if necessary)

If prompted, you may need to log in or sign up for an account to access the video generation features. Follow the on-screen instructions to complete the login or registration process.